Ask any musician, and they’ll tell you: The worst part about performing music is the practice — scheduling rehearsals, setting up, waiting, practicing, tearing down. It’s the least glamorous part of the job.

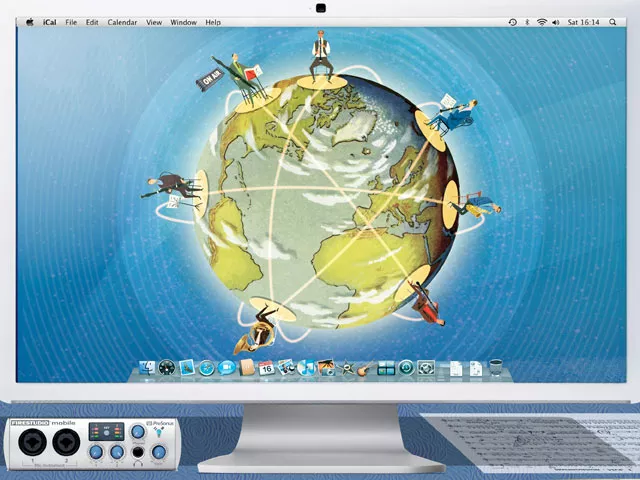

But what if, instead of schlepping yourself and your instruments around, you could simply turn on your computer, wave hello to your bandmates and get cracking on that opening passage to “Symphony of Evisceration in E-flat”?

Steve Simmons is working on it.

For six years, Simmons has toiled with Eastern Washington University students and faculty — along with Stanford University — to develop technology that would allow for seamless, ripping-fast audio and video connectivity between musicians in different parts of the world. They call it the Metropolitan Area Network Optimized Music Environment (or MANOME) project.

It’s harder than it sounds. Just open up a Chatroulette session and see for yourself. Yes, those are the sagging genitalia of an aging Ukrainian grocer, but while you may be able to see him (all too clearly) and carry on a conversation with him, those actions and that voice originated around the world several seconds ago. If you were to try to hum a few bars of the six-minute-long “Oi-Ukrainy” anthem in unison, you would find it nearly impossible to stay in sync.

“You can’t do it over the regular, ordinary, plain vanilla [Internet] because the delay time is just too big,” says Simmons, a 40-year veteran at Eastern. “It’s too slow and too choppy and too erratic.”

But slow commercial Internet connections are only part of the problem. The team has also had to deal with computers that don’t have enough power, operating systems that spend too much processing time on unimportant tasks and hardware that can’t relay the signal fast enough.

They’ve gone about this using what’s called the “spiral method,” which, Simmons says, is how J.K. Rowling went about writing the Harry Potter series. “She did not produce a huge monster outline of every single book and chapter and paragraph and then write in all the details,” he says. “She wrote the first one and thought about it and decided what to do next.”

The MANOME team’s Goblet of Fire moment came last spring, when they hosted a public performance in Cheney. In a recital hall on one end of campus sat Spokane Symphony cellist John Marshall with an electric cello, along with an audience of about a hundred people. Above Marshall hung a huge screen showing the video likeness of Chris Chafe, MANOME’s Stanford partner, cradling another electric cello in the computer science building across the EWU campus. Connecting them was a thick band of the school’s “green,” research-only, super-high-speed network cable.

The video was still hopelessly delayed, which was obvious whenever Simmons, sitting in the computer science building, addressed the crowd in the recital hall. But the team had reduced the latency of the audio to almost nothing. So when Marshall set his bow to the opening bars of “Frère Jacques” and Chafe joined in, the result was electric. The duo went on to perform about an hour of avant-garde jazz and classical music in two separate buildings, in perfect unison.

“It went brilliantly well,” says Simmons. “The audio was terrific. We were on the international map as music researchers.”

In the aftermath, though, they scratched their heads over the latency in the video. Marshall said that while onstage, he had to keep from looking at his monitor because Chafe’s delayed movements would throw off his own timing. They hadn’t anticipated it would be so bad.

The problem is that while audio data are relatively small and easy to shuttle around, video is not. “Audio is small and video is huge,” says Simmons. “How can you do it? It’s like balancing an elephant against a fly.”

That balancing act was their goal for this academic year. They set aside the trucks full of equipment they’d used for the spring concert and started from scratch on the video problem. Their HQ today consists of little more than an off-the-shelf desktop computer, an oscilloscope, a couple of cardboard boxes and a tangle of wires running back and forth. With this, they’ve begun to transmit a very stripped-down video image: no color, just enough focus and resolution to give musicians the visual cues they need to stay in time.

“We’re up to the Half-Blood Prince,” Simmons says, referring to the sixth book in the seven-book Potter series.

Even if they reach the Deathly Hallows milestone, however, they may have to wait for broadband to catch up before this technology can ever come together. The multi-gigabit network they use covers the Inland Northwest and runs directly to Seattle, but because this kind of connectivity is still so rare, they have to use Seattle as their jumping-off point to get anywhere else. They’re now exploring the idea of an audio/video demonstration performance with musicians in Portland, Seattle or Utah next spring. But they’re still a long way from Paris, Dakar or Beijing.

“Many people think it’s impossible, but I totally do not think that,” Simmons says with a smile. “I’m absolutely sure in my heart and mind that it’s absolutely possible, and it’s gonna happen.”

Even if all they ultimately accomplish is allowing bands to practice in a separate room from their malodorous drummer, that’s not nothing. Musicians the world over will thank MANOME for that.